If you’re planning to work with AI or high-throughput workloads, the NVMe stack improvements in Windows Server 2025 are worth a look. Microsoft has also introduced a Campus Cluster option for S2D to improve fault tolerance.

One thing is certain: data storage is becoming increasingly important, and with AI workloads and other near real-time needs, ultra-fast storage is becoming a necessity. Windows Server 2025 brings many new advancements and capabilities, and Microsoft has heavily optimized how it interfaces with NVMe storage. The reworked NVMe stack delivers the highest NVMe performance to date in Windows Server and also helps enable new capabilities, including the new Storage Spaces Direct (S2D) Campus Cluster. Let's dive in and take a look at the new enhancements.

NVMe is the future

NVMe (Non-Volatile Memory Express) has taken the data center world by storm. By offering high-throughput, low-latency interfaces to solid-state drives, it has transformed enterprise storage performance for a wide range of use cases. The AI revolution is a major driver of demand for fast storage in the data center, along with real-time analytics, high-performance computing, and cloud-scale infrastructure. Traditional storage protocols simply can't keep up with these new server needs.

Windows Server 2025 NVMe enhancements

To meet these new demands head on, Microsoft has introduced enhancements to the Windows Server 2025 storage stack that help customers take full advantage of the performance and capabilities of modern NVMe storage.

According to Akang Basavaju, Product Manager at Microsoft, Windows Server 2025 introduces a new NVMe-optimized storage stack capable of unlocking the full potential of PCIe Gen 5 NVMe SSDs. This allows Windows Server 2025 to deliver a significant performance leap over Windows Server 2022 on Gen 5 NVMe devices. With Gen 5, random read performance of over 3.4 million IOPS (input/output operations per second) is now possible.

Compared to earlier generations (Gen 3 SSDs at 1.1 million IOPS and Gen 4 at 1.5 million IOPS), this is a dramatic jump. Windows Server 2025 aims to make that performance accessible to customers without requiring specialized tuning or reconfiguration.
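To put the generational numbers in perspective, the short Python sketch below turns the quoted IOPS figures into speedup ratios and an approximate bandwidth. It assumes the figures refer to 4 KiB random reads (the block size used in Microsoft's demo); since the Gen 5 figure is quoted as "over 3.4 million", the results are lower bounds.

```python
# Approximate random-read IOPS per SSD generation, as quoted in the session.
IOPS_BY_GEN = {"Gen 3": 1.1e6, "Gen 4": 1.5e6, "Gen 5": 3.4e6}
BLOCK_SIZE_BYTES = 4096  # assumption: 4 KiB random reads


def speedup(new_iops: float, old_iops: float) -> float:
    """Return how many times faster new_iops is than old_iops."""
    return new_iops / old_iops


def throughput_gb_per_s(iops: float, block_size: int = BLOCK_SIZE_BYTES) -> float:
    """Convert IOPS at a fixed block size into decimal gigabytes per second."""
    return iops * block_size / 1e9


if __name__ == "__main__":
    gen5 = IOPS_BY_GEN["Gen 5"]
    for gen in ("Gen 3", "Gen 4"):
        print(f"Gen 5 vs {gen}: {speedup(gen5, IOPS_BY_GEN[gen]):.1f}x")
    print(f"Gen 5 at 4 KiB: ~{throughput_gb_per_s(gen5):.1f} GB/s")
```

Run as-is, this reports roughly a 3.1x gain over Gen 3, a 2.3x gain over Gen 4, and about 13.9 GB/s of 4 KiB reads from a single Gen 5 drive.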

Multi-Queue Architecture

Let's look at one of the central changes behind Windows Server 2025's NVMe performance gains: the multi-queue (multiQ) architecture. Microsoft is implementing it in the following phases:

- Initial 2025 Release – When Windows Server 2025 became generally available (GA) in late 2024, it already included some NVMe optimizations.

- Ongoing Improvements – Microsoft has continued to update the IO path to improve parallelization and reduce CPU overhead, and these updates will keep rolling out.

- MultiQ Architecture – True multiQ support has been introduced in the lower layers of the storage stack, allowing high concurrency and letting IOPS scale up to the hardware's limits.
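The general idea behind multi-queue IO can be sketched in a few lines. The model below is hypothetical and not Microsoft's implementation (the Windows internals are not public): each CPU is mapped to its own NVMe submission/completion queue pair, so cores do not contend for a single shared queue. When a device exposes fewer queues than there are CPUs, this sketch assumes a simple round-robin mapping. Queue IDs start at 1 because, in the NVMe specification, queue 0 is the admin queue.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class QueuePair:
    """Hypothetical model of an NVMe IO submission/completion queue pair."""
    qid: int
    submissions: deque = field(default_factory=deque)


class MultiQueueDispatcher:
    """Illustrative multi-queue dispatch: each CPU submits to its own queue pair."""

    def __init__(self, num_cpus: int, num_hw_queues: int):
        if num_cpus < 1 or num_hw_queues < 1:
            raise ValueError("need at least one CPU and one hardware queue")
        # IO queue IDs start at 1; queue 0 is reserved for admin commands in NVMe.
        self.queues = [QueuePair(qid=i + 1) for i in range(num_hw_queues)]
        # Assumed round-robin mapping: if there are fewer hardware queues than
        # CPUs, several CPUs share one queue pair.
        self.cpu_to_queue = {
            cpu: self.queues[cpu % num_hw_queues] for cpu in range(num_cpus)
        }

    def submit(self, cpu: int, request: str) -> int:
        """Append a request to the submitting CPU's queue; return that queue's ID."""
        queue = self.cpu_to_queue[cpu]
        queue.submissions.append(request)
        return queue.qid


if __name__ == "__main__":
    dispatcher = MultiQueueDispatcher(num_cpus=8, num_hw_queues=4)
    print(dispatcher.submit(cpu=0, request="read LBA 0"))
    print(dispatcher.submit(cpu=5, request="read LBA 8"))
```

With eight CPUs and four queues, CPU 0 lands on queue 1 and CPU 5 on queue 2. The key design point is that no global lock sits between the CPUs and the device; each core only touches its own queue.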

Microsoft redesigned the lower storage stack, which includes the disk and adapter layers, while leaving the upper stack (file systems, volume manager, and so on) unchanged. This preserves compatibility and keeps things simple for IT admins and developers.

NVMe Disk Driver

One of the revamped parts of the architecture is a new NVMe disk driver that takes over NVMe command mapping from the traditional disk driver. It communicates with the StorPort layer below it.

Here’s what’s new in the IO path:

- NVMe Disk Driver – This new driver replaces the legacy disk.sys driver for NVMe devices.

- Enhanced StorPort Path – A new data path in StorPort is optimized for NVMe and supports both standard and direct queuing in line with the NVMe specification.

- Updated StorNVMe and Miniport Drivers – The inbox StorNVMe driver is updated for the new path, and Microsoft will support third-party miniport drivers that leverage the new architecture's performance capabilities. This depends on third-party vendors updating their drivers.
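A simplified way to picture the redesign is to list the layers an NVMe read passes through, top to bottom, before and after the change. This is an illustrative model only: the layer labels are descriptive, and real Windows stacks contain more components (filters, partition manager, and so on). It shows that only the lower layers (disk driver, StorPort path, and miniport) change, while the upper stack stays the same.

```python
# Simplified, illustrative model of the layers an NVMe IO passes through.
# Labels are descriptive, not an exhaustive list of Windows components.
UPPER_STACK = ["file system", "volume manager"]  # unchanged in Windows Server 2025


def nvme_io_path(new_stack_active: bool) -> list[str]:
    """Return the ordered layers (top to bottom) for an IO to an NVMe disk."""
    if new_stack_active:
        lower = [
            "NVMe disk driver",
            "StorPort (NVMe-optimized path)",
            "StorNVMe / updated miniport",
        ]
    else:
        lower = ["disk.sys", "StorPort", "StorNVMe / miniport"]
    return UPPER_STACK + lower + ["NVMe SSD"]


legacy, new = nvme_io_path(False), nvme_io_path(True)

# Both paths have the same depth, so compare them layer by layer.
changed = [(old, cur) for old, cur in zip(legacy, new) if old != cur]
for old, cur in changed:
    print(f"{old:>20} -> {cur}")
```

Only the three lower-stack layers show up as changed, which is why file systems and applications above them need no modification.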

This design keeps existing hardware supported while enabling dramatic performance gains with modern NVMe storage.

Real-world performance gains

In a live demo at the Windows Server Summit, Microsoft compared two identical systems head to head. One server ran Windows Server 2022 and the other ran Windows Server 2025 with the new NVMe storage stack. Both systems had four Gen 5 NVMe SSDs installed.

Results from 4K random read testing using Performance Monitor showed the following:

- 80%+ IOPS improvement with the new Windows Server 2025 storage stack over 2022.

- Significant CPU savings per IO, enabling more efficient workloads and reduced energy consumption.

When the thread count was increased to stress the system further, Windows Server 2025 saturated the SSDs' hardware performance ceiling, reaching 13.4 million IOPS across the four drives while still consuming fewer CPU resources than Windows Server 2022.
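The demo figures can be broken down with some simple arithmetic. The sketch below derives per-drive IOPS and aggregate bandwidth from the reported 13.4 million IOPS, assuming "4K" means 4 KiB. It also includes a helper for expressing "CPU per IO", one common way to normalize CPU cost from Performance Monitor data; Microsoft's exact metric may differ, and no CPU figures from the demo are assumed here.

```python
# Reported demo figures; the 4 KiB block size is an assumption for "4K".
TOTAL_IOPS = 13.4e6      # 13.4 million IOPS across the four drives
NUM_DRIVES = 4
BLOCK_SIZE_BYTES = 4096


def per_drive_iops(total_iops: float, num_drives: int) -> float:
    """Average IOPS contributed by each drive."""
    return total_iops / num_drives


def throughput_gb_per_s(iops: float, block_size_bytes: int) -> float:
    """Convert IOPS at a fixed block size into decimal GB/s."""
    return iops * block_size_bytes / 1e9


def cpu_us_per_io(cpu_utilization: float, logical_cpus: int, iops: float) -> float:
    """Average CPU time spent per IO, in microseconds.

    cpu_utilization is the busy fraction (0.0 to 1.0) across all logical CPUs,
    e.g. "\\Processor(_Total)\\% Processor Time" from Performance Monitor / 100.
    """
    busy_cpu_seconds_per_second = cpu_utilization * logical_cpus
    return busy_cpu_seconds_per_second / iops * 1e6


def percent_improvement(new_value: float, old_value: float) -> float:
    """Relative gain of new_value over old_value, in percent."""
    return (new_value - old_value) / old_value * 100


if __name__ == "__main__":
    drive_iops = per_drive_iops(TOTAL_IOPS, NUM_DRIVES)
    print(f"Per drive: {drive_iops / 1e6:.2f} million IOPS")
    bandwidth = throughput_gb_per_s(TOTAL_IOPS, BLOCK_SIZE_BYTES)
    print(f"Aggregate at 4 KiB: ~{bandwidth:.1f} GB/s")
```

This works out to about 3.35 million IOPS per drive and roughly 54.9 GB/s in aggregate. A lower `cpu_us_per_io` at the same or higher IOPS is what "CPU savings per IO" means in practice.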

Below, you can see a screenshot from the comparison between Windows Server 2022 (left) and Windows Server 2025 (right).

In large data centers, virtualized environments, or edge deployments where every watt and CPU cycle counts, performance gains like these, combined with the efficiency wins, can make a real difference.

Seamless Rollout and Compatibility

Microsoft is taking a careful but practical approach to rolling out the new stack:

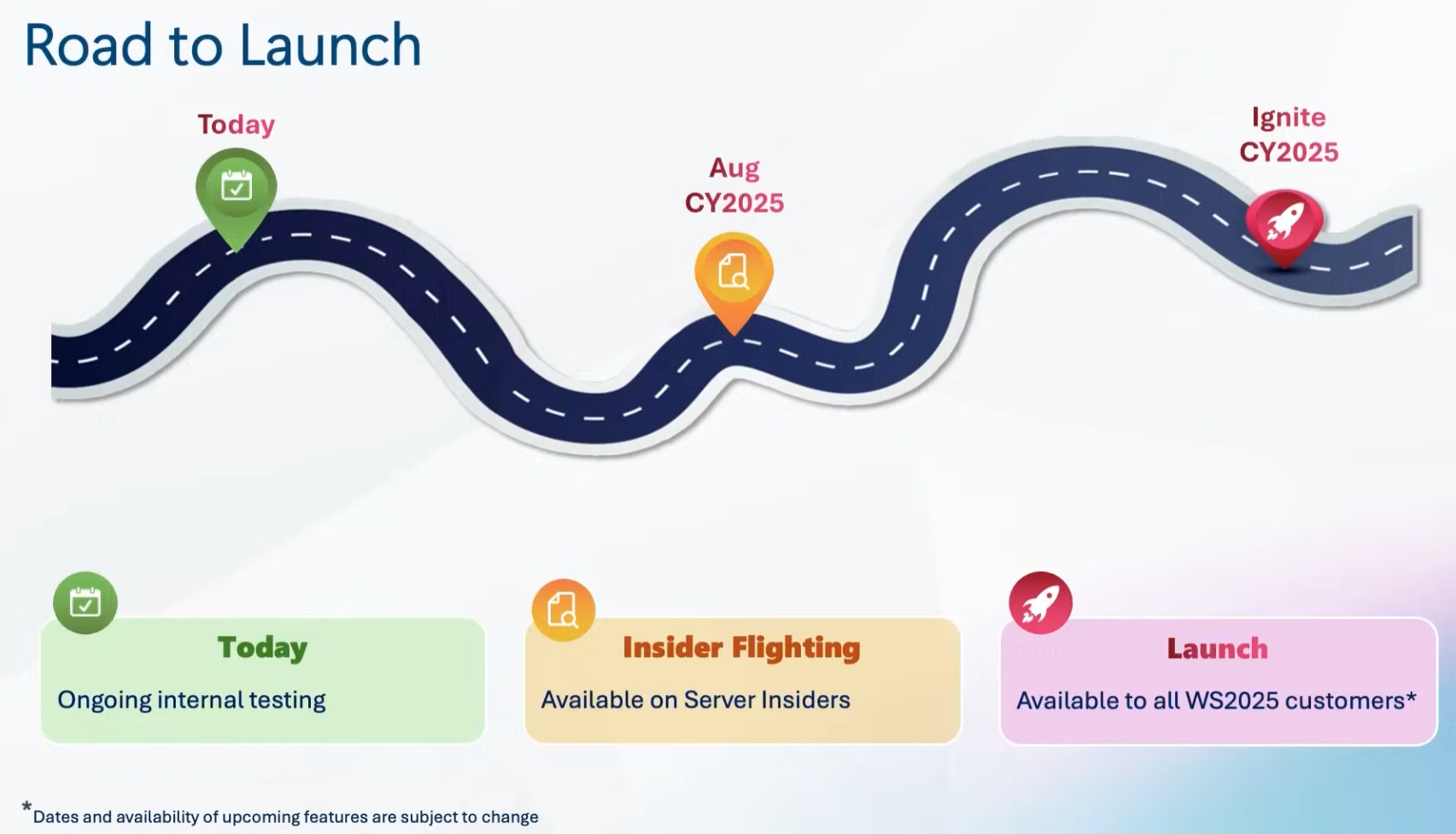

- Insider Preview – Starting in August 2025, the optimized stack will be available in the Windows Server Insider Program for early testing.

- General Availability – By Microsoft Ignite 2025, all Windows Server 2025 customers will receive the new stack as part of a standard OS update.

- Enabled by Default – No configuration changes are required. As long as the system detects NVMe storage, either in bare metal servers or virtual machines, the new path is used.

In-place upgrades require a reboot for the new stack to activate; until then, workloads continue to run on the existing stack path. There are no performance hits or added risk during the transition.

Keep in mind that systems using third-party miniport drivers will not benefit from the new stack until vendors update their drivers to support the new Windows Server 2025 architecture. Overall, this approach lets customers adopt the new features with minimal disruption to their environments.
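The rollout conditions described above boil down to a small decision rule. The sketch below is a hypothetical model of that logic, not a Windows API: `HostState` and its fields are illustrative names, and there is no real registry key or cmdlet behind them.

```python
from dataclasses import dataclass


@dataclass
class HostState:
    """Hypothetical snapshot of a server; field names are illustrative only."""
    has_nvme_storage: bool              # NVMe on bare metal or in a VM
    update_installed: bool              # OS update that ships the new stack
    rebooted_since_update: bool         # new stack activates only after a reboot
    uses_third_party_miniport: bool
    miniport_supports_new_stack: bool = False


def uses_new_nvme_stack(host: HostState) -> bool:
    """Return True if, under the rules described above, the new path is in use."""
    if not (host.has_nvme_storage and host.update_installed
            and host.rebooted_since_update):
        return False
    # Third-party miniports only benefit once the vendor updates the driver.
    if host.uses_third_party_miniport:
        return host.miniport_supports_new_stack
    return True


if __name__ == "__main__":
    pending_reboot = HostState(True, True, False, False)
    inbox_driver = HostState(True, True, True, False)
    old_vendor_driver = HostState(True, True, True, True)
    print(uses_new_nvme_stack(pending_reboot))
    print(uses_new_nvme_stack(inbox_driver))
    print(uses_new_nvme_stack(old_vendor_driver))
```

A host waiting on a reboot or running a not-yet-updated vendor miniport stays on the existing path. That fallback is what keeps the transition non-disruptive.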

New S2D Campus Cluster

Microsoft has also introduced a new Storage Spaces Direct (S2D) topology, S2D Campus Cluster, made possible in part by the new NVMe optimizations in Windows Server 2025.

Customers identified a need for additional fault tolerance in S2D clusters spanning two racks, buildings, or even server rooms within the same physical campus. With this goal in mind, S2D Campus Cluster aims to improve resiliency without introducing new or complicated network configurations or additional tools.

S2D Campus Cluster resiliency and use cases

S2D Campus Cluster extends the existing S2D feature set to support rack-aware resiliency. Note the following enhancements:

- Two-rack topology – You can now have resiliency across two physical racks or buildings using dark fiber or high-speed network connections.

- Copy-Mirror volumes – Supports 2-copy or 4-copy mirrored volumes. This provides flexibility in configuring data resilience.

- Rack + Node fault tolerance – In a 2+2 configuration (four nodes split between two racks), you can lose an entire rack and a node in the other rack and still keep data writable on the one surviving node.
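Why a 4-copy mirror tolerates a rack plus a node can be shown by counting copies. The sketch below uses a hypothetical 2+2 layout with an assumed placement (one copy per node for 4-copy, one copy per rack for 2-copy). Real S2D distributes data in slabs across nodes and also depends on cluster quorum and witness configuration, neither of which is modeled here; this only illustrates the copy-counting argument and the capacity trade-off.

```python
from itertools import combinations

# Hypothetical 2+2 layout: four nodes split across two racks.
NODE_RACK = {"A1": "rack A", "A2": "rack A", "B1": "rack B", "B2": "rack B"}


def copy_locations(copies: int) -> set[str]:
    """Assumed placement: 4-copy puts one copy on every node,
    2-copy puts one copy in each rack."""
    if copies == 4:
        return set(NODE_RACK)
    if copies == 2:
        return {"A1", "B1"}
    raise ValueError("only 2-copy and 4-copy mirrors are modeled")


def data_available(copies: int, failed_nodes: set[str]) -> bool:
    """Data survives if at least one copy sits on a node that is still up."""
    return bool(copy_locations(copies) - failed_nodes)


def usable_capacity_tb(raw_tb: float, copies: int) -> float:
    """Every byte is stored `copies` times, so usable space is raw / copies."""
    return raw_tb / copies


if __name__ == "__main__":
    rack_a_plus_b1 = {"A1", "A2", "B1"}  # lose rack A, then node B1
    for copies in (2, 4):
        survived = sum(
            data_available(copies, set(failed))
            for failed in combinations(NODE_RACK, 3)
        )
        print(
            f"{copies}-copy: rack A + B1 lost -> "
            f"available={data_available(copies, rack_a_plus_b1)}, "
            f"survives {survived}/4 three-node failures, "
            f"usable from 100 TB raw = {usable_capacity_tb(100, copies):.0f} TB"
        )
```

The 4-copy mirror survives every three-node failure but leaves only 25 TB usable out of 100 TB raw. The 2-copy mirror keeps 50 TB usable but survives the rack-plus-node scenario only if the surviving node happens to hold a copy.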

This level of redundancy is vital for edge deployments and regulated environments such as:

- Hospitals

- Universities

- Factories and manufacturing floors

- Cruise ships and offshore installations

- Stadiums and venues

- Business parks and corporate campuses

S2D Campus Cluster requirements and recommendations

In the documentation for S2D Campus Cluster, Microsoft outlines a few prerequisites for this configuration and best practices for deploying it in your environment.

- All-Flash Storage – All capacity drives must be flash-based (NVMe or SSD). Spinning disks (HDDs) are not supported.

- Latency – Inter-rack latency needs to be 1 ms or less for optimal performance.

- RDMA Networking – RDMA is recommended for faster, lower-overhead communication between nodes.

- No L3 Switch Required – The topology is simple. There is no need for Storage Replica or additional network layers.

- Storage Pool Recreation – To adopt this topology, customers must recreate the storage pool with the new configuration.
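The checklist above can be turned into a quick pre-deployment sanity check. The sketch below is a hypothetical planning helper, not a Microsoft tool or schema: `CampusClusterPlan` and its fields are illustrative. It treats the flash, latency, and pool-recreation items as hard errors and the RDMA recommendation as a warning.

```python
from dataclasses import dataclass

FLASH_TYPES = {"NVMe", "SSD"}
MAX_INTER_RACK_LATENCY_MS = 1.0


@dataclass
class CampusClusterPlan:
    """Hypothetical description of a planned deployment; not a Microsoft schema."""
    capacity_drive_types: list[str]   # e.g. ["NVMe", "SSD", "HDD"]
    inter_rack_latency_ms: float
    rdma_enabled: bool
    pool_recreated: bool


def check_plan(plan: CampusClusterPlan) -> tuple[list[str], list[str]]:
    """Return (errors, warnings) for a plan against the listed prerequisites."""
    errors: list[str] = []
    warnings: list[str] = []
    non_flash = sorted(set(plan.capacity_drive_types) - FLASH_TYPES)
    if non_flash:
        errors.append(f"capacity drives must be flash; found {non_flash}")
    if plan.inter_rack_latency_ms > MAX_INTER_RACK_LATENCY_MS:
        errors.append(
            f"inter-rack latency {plan.inter_rack_latency_ms} ms exceeds "
            f"{MAX_INTER_RACK_LATENCY_MS} ms"
        )
    if not plan.pool_recreated:
        errors.append("storage pool must be recreated for the campus topology")
    if not plan.rdma_enabled:
        warnings.append("RDMA is recommended for inter-node traffic")
    return errors, warnings


if __name__ == "__main__":
    plan = CampusClusterPlan(["NVMe", "HDD"], 2.0, False, False)
    errors, warnings = check_plan(plan)
    for message in errors:
        print("ERROR:", message)
    for message in warnings:
        print("WARNING:", message)
```

Keeping recommendations separate from hard requirements mirrors the way the documentation distinguishes prerequisites from best practices.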

The new S2D Campus Cluster helps position Windows Server 2025 as a more capable solution for edge and hybrid data centers. It delivers high availability without the complexity of stretched clusters or SRM-type replication.

Wrapping up

For those excited about storage performance, Windows Server 2025 brings a range of tweaks and architectural changes that significantly improve NVMe performance. Microsoft is rolling out these changes incrementally over the course of 2025, and at this point the NVMe improvements look set to arrive seamlessly and without disruption.

The new S2D Campus Cluster also looks like an interesting option, providing additional resiliency for those who want to protect their data across separate racks or buildings on the same campus.